Ever wondered what happens when you type a URL into your browser and hit Enter? It’s not just a simple “request sent, response received” story. The journey involves DNS resolution, TCP handshakes, TLS encryption, caching, and more. In this post, we’ll break down the HTTP request lifecycle, explain each step in a reader-friendly way, and use a real-world analogy to make it crystal clear. Let’s follow the path from your browser to the server and back!

DNS Resolution: Finding the Server’s Address

The journey begins when you enter a URL like https://furkanbaytekin.dev. Your browser needs to find the server’s IP address to send the request. This is where DNS (Domain Name System) resolution comes in:

- The browser checks its own cache for the IP address of furkanbaytekin.dev.

- If it isn’t found there, the browser asks the operating system, which checks its own DNS cache.

- If the name is still unresolved, the query goes to a recursive DNS resolver, usually run by your ISP or by a public service such as Google’s 8.8.8.8.

- Unless it already has the answer cached, the resolver queries a chain of DNS servers (root, then TLD, then the domain’s authoritative server) to get the IP address.

- Once resolved, the IP address is cached at each layer for as long as the record’s TTL (time to live) allows.

Think of this as looking up a friend’s address in your phone’s contacts before sending a package.

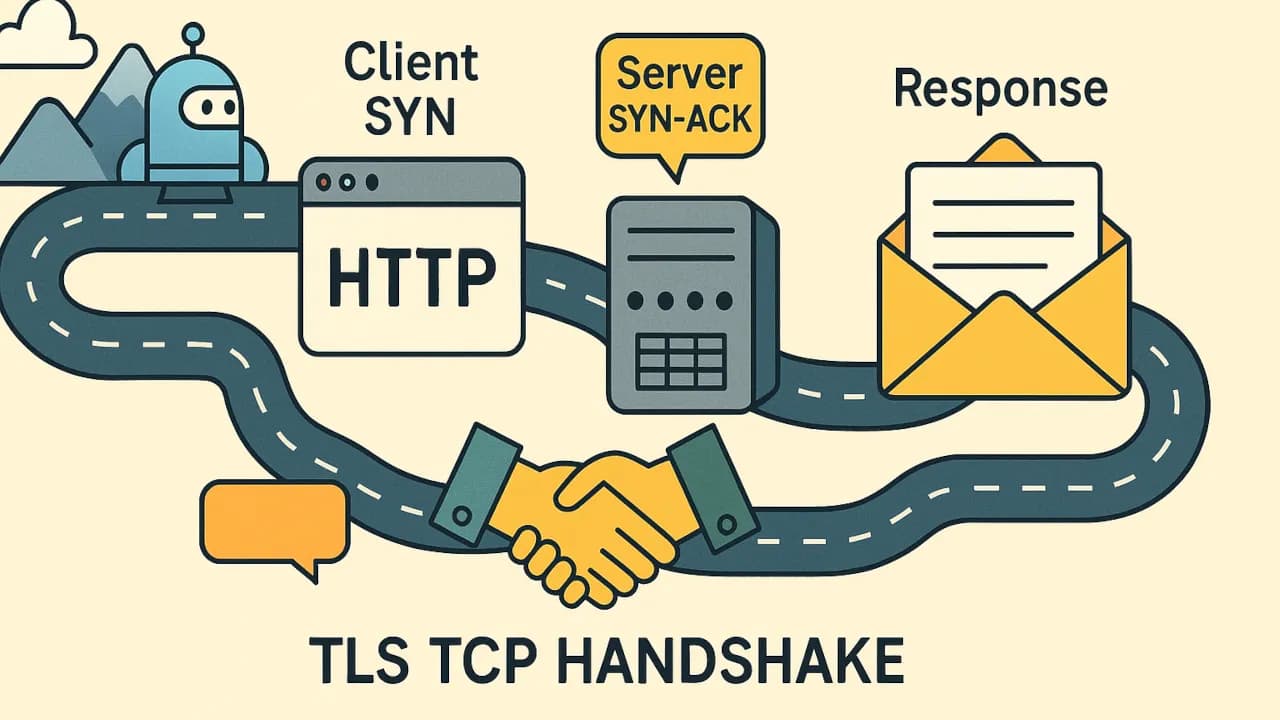
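To make the lookup order above concrete, here is a simulated sketch in Python. It is not a real resolver: the root, TLD, and authoritative “servers” are plain dictionaries, the names `TTLCache`, `ask`, and `resolve` are made up for illustration, and 203.0.113.10 is a documentation-only address, not the site’s real IP. In practice the browser hands the name to the OS (for example through `getaddrinfo`), and resolvers exchange DNS messages over the network, typically on port 53.

```python
import time

# Hypothetical zone data for illustration only. 203.0.113.10 comes from the
# 203.0.113.0/24 documentation range; it is NOT the site's real address.
SERVERS = {
    "root": {"dev": ("referral", "tld")},
    "tld": {"furkanbaytekin.dev": ("referral", "authoritative")},
    "authoritative": {"furkanbaytekin.dev": ("answer", "203.0.113.10", 300)},
}


class TTLCache:
    """A name -> IP cache whose entries expire after their TTL (in seconds)."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self.entries = {}  # name -> (ip, expires_at)

    def get(self, name):
        entry = self.entries.get(name)
        if entry is not None and entry[1] > self.clock():
            return entry[0]
        return None  # missing or expired

    def put(self, name, ip, ttl):
        self.entries[name] = (ip, self.clock() + ttl)


def ask(server, name):
    """Return the entry for the longest suffix of `name` this server knows."""
    labels = name.split(".")
    for i in range(len(labels)):
        suffix = ".".join(labels[i:])
        if suffix in SERVERS[server]:
            return SERVERS[server][suffix]
    raise LookupError(f"{server} knows nothing about {name}")


def resolve(name, browser_cache, os_cache, resolver_cache, log):
    # Check each cache layer, nearest first.
    layers = [("browser cache", browser_cache), ("OS cache", os_cache),
              ("resolver cache", resolver_cache)]
    for label, cache in layers:
        ip = cache.get(name)
        if ip is not None:
            log.append(f"hit: {label}")
            return ip
    # Cache miss everywhere: the resolver follows referrals from the root
    # down to the authoritative server.
    server = "root"
    while True:
        log.append(f"ask: {server}")
        entry = ask(server, name)
        if entry[0] == "answer":
            _, ip, ttl = entry
            break
        server = entry[1]  # referral: ask the next server down
    # Every layer caches the answer until its TTL runs out.
    for _, cache in layers:
        cache.put(name, ip, ttl)
    return ip


now = [0.0]  # fake clock so the example is deterministic
caches = [TTLCache(clock=lambda: now[0]) for _ in range(3)]
log = []
print(resolve("furkanbaytekin.dev", *caches, log))  # 203.0.113.10
print(log)  # ['ask: root', 'ask: tld', 'ask: authoritative']
```

Advancing the fake clock past the 300-second TTL (`now[0] = 301`) makes every cache miss again, so the next lookup walks the root, TLD, and authoritative servers once more.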

TCP and TLS Handshake: Establishing a Connection

Now that the browser has the server’s IP address, it needs to establish a connection. This involves two key steps: the TCP handshake and, for secure sites, the TLS handshake.

- TCP Handshake:

  - The browser sends a `SYN` packet to the server to initiate a connection. (Hey server, can you hear me?)

  - The server responds with a `SYN-ACK` packet. (Yes, I can hear you. Can you hear me?)

  - The browser sends an `ACK` packet, completing the three-way handshake. (Yes, I can hear you too!)

  - This establishes a reliable TCP connection for data exchange.

- TLS Handshake (for HTTPS):

  - The browser and server agree on a TLS version and cipher suite, and the server presents its certificate so the browser can verify that it is talking to the genuine site.

  - They run a key exchange to agree on a session key, which encrypts everything sent afterwards.

  - This adds security at the cost of extra latency: roughly one more network round trip with TLS 1.3, or two with TLS 1.2.
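The sequence and acknowledgement numbers in the three-way handshake follow a simple rule, sketched below as a Python simulation (nothing is sent over the network). `Segment` and `three_way_handshake` are hypothetical names, and the initial sequence numbers are fixed for readability; real TCP stacks pick them unpredictably.

```python
from dataclasses import dataclass
from typing import List, Optional

SEQ_SPACE = 2**32  # TCP sequence numbers are 32-bit and wrap around


@dataclass(frozen=True)
class Segment:
    flags: str          # "SYN", "SYN-ACK" or "ACK"
    seq: int            # sender's sequence number
    ack: Optional[int]  # next sequence number expected from the peer (None without ACK flag)


def three_way_handshake(client_isn: int, server_isn: int) -> List[Segment]:
    # 1. Client -> server: SYN carries the client's initial sequence number (ISN).
    syn = Segment("SYN", seq=client_isn, ack=None)
    # 2. Server -> client: a SYN consumes one sequence number, so the server
    #    acknowledges client_isn + 1 and announces its own ISN.
    syn_ack = Segment("SYN-ACK", seq=server_isn, ack=(syn.seq + 1) % SEQ_SPACE)
    # 3. Client -> server: the client acknowledges the server's SYN the same way.
    ack = Segment("ACK", seq=syn_ack.ack, ack=(syn_ack.seq + 1) % SEQ_SPACE)
    return [syn, syn_ack, ack]


for segment in three_way_handshake(client_isn=1000, server_isn=5000):
    print(segment)
# Segment(flags='SYN', seq=1000, ack=None)
# Segment(flags='SYN-ACK', seq=5000, ack=1001)
# Segment(flags='ACK', seq=1001, ack=5001)
```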

This is like confirming a secure delivery method with a courier before sending your package.
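The “agree on a session key” step rests on a key exchange. In a full TLS 1.3 handshake this is an ephemeral Diffie-Hellman exchange (commonly over X25519). The toy version below uses plain modular arithmetic to show the core idea: each side keeps a private number, sends only a public value, and both still arrive at the same secret. The parameters are deliberately insecure, certificate checking is left out, and hashing the secret with SHA-256 stands in for TLS 1.3’s HKDF-based key schedule. All function names are hypothetical.

```python
import hashlib
import secrets

# Toy parameters, NOT secure: far too small and not a vetted group.
P = 2**127 - 1  # a Mersenne prime, chosen only because it is easy to write down
G = 3


def keypair():
    """Pick a random private exponent in [2, P-2] and derive the public value."""
    private = secrets.randbelow(P - 3) + 2
    public = pow(G, private, P)  # G^private mod P
    return private, public


def shared_secret(my_private, their_public):
    """(G^b)^a mod P == (G^a)^b mod P, so both sides get the same number."""
    return pow(their_public, my_private, P)


def session_key(secret):
    """Simplification: TLS 1.3 runs the secret and handshake transcript through HKDF."""
    return hashlib.sha256(secret.to_bytes(16, "big")).hexdigest()


browser_priv, browser_pub = keypair()
server_priv, server_pub = keypair()
# Only browser_pub and server_pub ever cross the network.
browser_key = session_key(shared_secret(browser_priv, server_pub))
server_key = session_key(shared_secret(server_priv, browser_pub))
print(browser_key == server_key)  # True
```

An eavesdropper sees only the two public values; recovering the shared secret from them is the discrete-logarithm problem, which is infeasible for properly sized, well-chosen groups. These toy parameters are not one of them.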

HTTP Request Formation: Crafting the Message

With the connection established, the browser constructs an HTTP request. This includes:

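- Request Line: Specifies the method (e.g., `GET`), the request target (usually a path such as `/index.html`), and the HTTP version (e.g., `HTTP/1.1`). HTTP/2 carries the same information in binary form, as pseudo-headers, rather than as a text line.

- Headers: Provide metadata such as `Host` (required in HTTP/1.1), `User-Agent`, `Accept`, and `Connection: keep-alive`.

- Body (optional): Contains data for methods like `POST`, such as form submissions.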

For example, a simple GET request might look like:

```http
GET /index.html HTTP/1.1
Host: furkanbaytekin.dev
User-Agent: Mozilla/5.0
Accept: text/html
Connection: keep-alive
```

This is like writing a detailed order form for your package, specifying what you want and how it should be delivered.
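On the wire, an HTTP/1.1 request is just text with strict line endings. The Python sketch below (the `build_request` helper is hypothetical) serializes the example request into the exact bytes a client would send: a request line, headers each terminated by CRLF (`\r\n`), a blank line, then the optional body.

```python
def build_request(method, path, host, headers=None, body=b""):
    """Serialize an HTTP/1.1 request into the bytes sent over the connection."""
    # Request line, then the Host header that HTTP/1.1 requires.
    lines = [f"{method} {path} HTTP/1.1", f"Host: {host}"]
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    if body:
        # Tells the server exactly where the body ends.
        lines.append(f"Content-Length: {len(body)}")
    # Every line ends with CRLF, and an empty line separates headers from the body.
    head = "\r\n".join(lines) + "\r\n\r\n"
    return head.encode("ascii") + body


request = build_request(
    "GET", "/index.html", "furkanbaytekin.dev",
    {"User-Agent": "Mozilla/5.0", "Accept": "text/html", "Connection": "keep-alive"},
)
print(request)
# b'GET /index.html HTTP/1.1\r\nHost: furkanbaytekin.dev\r\nUser-Agent: Mozilla/5.0\r\nAccept: text/html\r\nConnection: keep-alive\r\n\r\n'
```

Over a real connection these bytes would be written to the TCP socket, or to the TLS-wrapped socket for HTTPS, for example with `sock.sendall(request)`. HTTP/2 sends the same fields as binary frames instead.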

Response Journey: Server to Browser

The server receives the request, processes it, and sends a response. Here’s what happens:

- Server Processing:

  - A web server such as Nginx receives the request and either handles it directly (for example, serving a static file) or forwards it to a backend application, such as one built with Node.js.

  - The server retrieves or generates the requested resource (e.g., an HTML page, image, or API data).

  - The server constructs an HTTP response with a status code (e.g., `200 OK`, `404 Not Found`), headers, and a body.

- Response Delivery:

  - The response travels back over the same TCP connection, encrypted if TLS is in use.

  - The browser receives and parses the response, renders the content, and fetches any additional resources the page references (e.g., CSS, JavaScript, images).
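Both ends of this exchange can be sketched in a few lines of Python: a hypothetical `handle` function plays the server (routing by path and building a status line, headers, and body), and a hypothetical `parse_response` plays the browser. `PAGES` is made-up content. Only the status codes and reason phrases come from the standard library (`http.HTTPStatus`); real servers and browsers also deal with chunked transfer encoding, compression, and far more headers.

```python
from http import HTTPStatus

# Hypothetical site content, keyed by path.
PAGES = {"/index.html": b"<h1>Hello</h1>"}


def handle(raw_request: bytes) -> bytes:
    """Server side: route the request by path and build a raw HTTP/1.1 response."""
    request_line = raw_request.split(b"\r\n", 1)[0].decode("ascii")
    _method, path, _version = request_line.split(" ")
    if path in PAGES:
        status, body = HTTPStatus.OK, PAGES[path]
    else:
        status, body = HTTPStatus.NOT_FOUND, b"Not Found"
    head = (
        f"HTTP/1.1 {status.value} {status.phrase}\r\n"  # status line
        "Content-Type: text/html\r\n"
        f"Content-Length: {len(body)}\r\n"
        "\r\n"
    )
    return head.encode("ascii") + body


def parse_response(raw: bytes):
    """Browser side: split a raw response into status, headers, and body."""
    head, _, rest = raw.partition(b"\r\n\r\n")
    status_line, *header_lines = head.decode("ascii").split("\r\n")
    _version, code, reason = status_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # header names are case-insensitive
    # Content-Length marks where this response ends, which matters on a
    # keep-alive connection that may carry further responses.
    length = int(headers.get("content-length", len(rest)))
    return int(code), reason, headers, rest[:length]


raw = handle(b"GET /index.html HTTP/1.1\r\nHost: furkanbaytekin.dev\r\n\r\n")
print(parse_response(raw))
# (200, 'OK', {'content-type': 'text/html', 'content-length': '14'}, b'<h1>Hello</h1>')
```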

This is like the courier delivering your package with a receipt confirming what’s inside.
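Parsing is not the end of the trip: the HTML usually references further resources, and each one becomes another request. This Python sketch uses the standard library’s `html.parser` to collect stylesheet, script, and image URLs from a page. `SubresourceFinder` is a hypothetical name, and real browsers discover resources in many more ways (preload scanners, CSS `@import`, JavaScript-initiated fetches).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class SubresourceFinder(HTMLParser):
    """Collects absolute URLs of stylesheets, scripts, and images in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.found = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # boolean attributes such as `async` map to None
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "stylesheet" in rel and attrs.get("href"):
            self.found.append(urljoin(self.base_url, attrs["href"]))
        elif tag in ("script", "img") and attrs.get("src"):
            # Relative URLs are resolved against the page's own URL.
            self.found.append(urljoin(self.base_url, attrs["src"]))


finder = SubresourceFinder("https://furkanbaytekin.dev/index.html")
finder.feed('<link rel="stylesheet" href="/style.css">'
            '<script src="app.js"></script>'
            '<img src="https://cdn.example.com/logo.png">')
print(finder.found)
# ['https://furkanbaytekin.dev/style.css', 'https://furkanbaytekin.dev/app.js', 'https://cdn.example.com/logo.png']
```

Each URL found this way repeats the lifecycle: a new host such as `cdn.example.com` needs its own DNS lookup and connection, while URLs on the same host can often reuse the existing keep-alive connection.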

Browser Cache, CDN, and Reverse Proxy: Speeding Things Up

Several systems optimize the request lifecycle:

- Browser Cache:

  - Stores resources like images and scripts locally.

  - If a cached resource is still fresh according to headers like `Cache-Control`, the browser skips the request entirely. If it is stale, the browser can revalidate it with a conditional request (e.g., `If-None-Match` carrying the stored `ETag`), and the server answers `304 Not Modified` if nothing has changed.

- CDN (Content Delivery Network):

  - CDNs like Cloudflare cache content on edge servers closer to the user.

  - They reduce latency by serving resources from a nearby location instead of the origin server.

- Reverse Proxy:

  - Sits in front of one or more backend servers, handling tasks like load balancing, caching, or compression.

  - It can serve cached responses itself or route requests to the appropriate backend.
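The browser-cache decision can be sketched as a small Python function. This is a simplified model that looks only at the `max-age`, `no-cache`, and `no-store` directives of `Cache-Control`; real caches, as specified in RFC 9111, also consider `Expires`, the `Age` header, `s-maxage`, and heuristic freshness. The function names are hypothetical.

```python
def parse_cache_control(value):
    """Turn 'public, max-age=3600' into {'public': None, 'max-age': '3600'}."""
    directives = {}
    for part in value.split(","):
        name, _, arg = part.strip().partition("=")
        if name:
            directives[name.lower()] = arg.strip('"') or None
    return directives


def cache_decision(cache_control, age_seconds):
    """Decide what a browser cache does with a stored response of a given age."""
    directives = parse_cache_control(cache_control)
    if "no-store" in directives:
        return "not cached"   # must not be stored at all
    if "no-cache" in directives:
        return "revalidate"   # may be stored, but must be checked with the server first
    max_age = directives.get("max-age")
    if max_age is not None and max_age.isdigit() and age_seconds < int(max_age):
        return "use cache"    # still fresh: no request is sent
    return "revalidate"       # stale, or no explicit lifetime in this simplified model


print(cache_decision("public, max-age=3600", age_seconds=60))    # use cache
print(cache_decision("public, max-age=3600", age_seconds=7200))  # revalidate
print(cache_decision("no-store", age_seconds=0))                 # not cached
```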

These are like having a local warehouse (cache), a nearby distribution center (CDN), or a logistics manager (reverse proxy) to streamline package delivery.
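A reverse proxy’s two main jobs from the list above, spreading requests across backends and answering repeat requests from its own cache, fit in a short Python sketch. `ReverseProxy` and the two lambda “backends” are hypothetical stand-ins for real servers, and round-robin is just one of several load-balancing strategies. A CDN edge server behaves much like the caching half of this, placed geographically close to users.

```python
import itertools


class ReverseProxy:
    """Round-robin load balancer with a tiny in-memory response cache."""

    def __init__(self, backends):
        # backends: list of (name, handler) pairs, where handler(path) -> response
        self._rotation = itertools.cycle(backends)
        self._cache = {}

    def handle(self, path, cacheable=True):
        if cacheable and path in self._cache:
            return self._cache[path], "proxy cache"  # served without touching a backend
        name, handler = next(self._rotation)        # pick the next backend in turn
        response = handler(path)
        if cacheable:
            self._cache[path] = response
        return response, name


proxy = ReverseProxy([
    ("app-1", lambda path: f"{path} from app-1"),
    ("app-2", lambda path: f"{path} from app-2"),
])
print(proxy.handle("/index.html"))                 # ('/index.html from app-1', 'app-1')
print(proxy.handle("/api/time", cacheable=False))  # ('/api/time from app-2', 'app-2')
print(proxy.handle("/index.html"))                 # ('/index.html from app-1', 'proxy cache')
```

Marking a route as non-cacheable (`cacheable=False`) mimics dynamic endpoints such as APIs, which always go to a backend.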

Real-World Analogy: The Shipping Journey

Let’s tie it all together with a shipping analogy:

- Typing the URL: You decide to order a product online and enter the store’s address (URL).

- DNS Resolution: You look up the store’s physical address (IP) in a directory (DNS).

- TCP/TLS Handshake: You call the courier to confirm a secure delivery method (TCP/TLS).

- HTTP Request: You fill out an order form specifying what you want (request).

- Server Response: The store prepares and ships your product with a receipt (response).

- Cache/CDN/Proxy: You check your pantry first (cache), order from a nearby warehouse (CDN), or the courier optimizes the route (reverse proxy).

Just like a well-coordinated shipment, the HTTP request lifecycle gets your webpage to you quickly and securely.

Conclusion

The HTTP request lifecycle is a complex but fascinating journey, involving DNS resolution, TCP and TLS handshakes, request formation, server processing, and optimizations like caching and CDNs. By understanding this process, you can optimize your website for speed, improve user experience, and boost SEO. Next time you hit Enter, you’ll know about the incredible orchestration happening behind the scenes, like a perfectly executed delivery!
